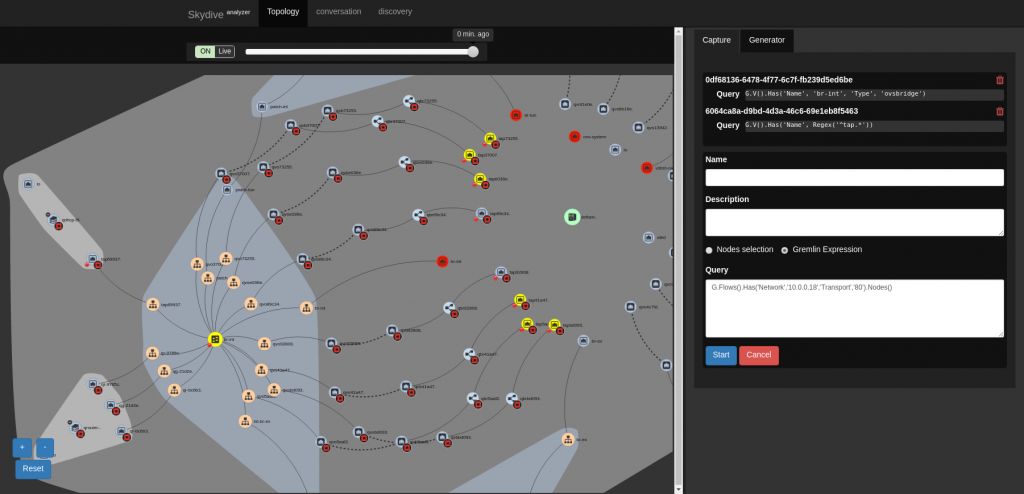

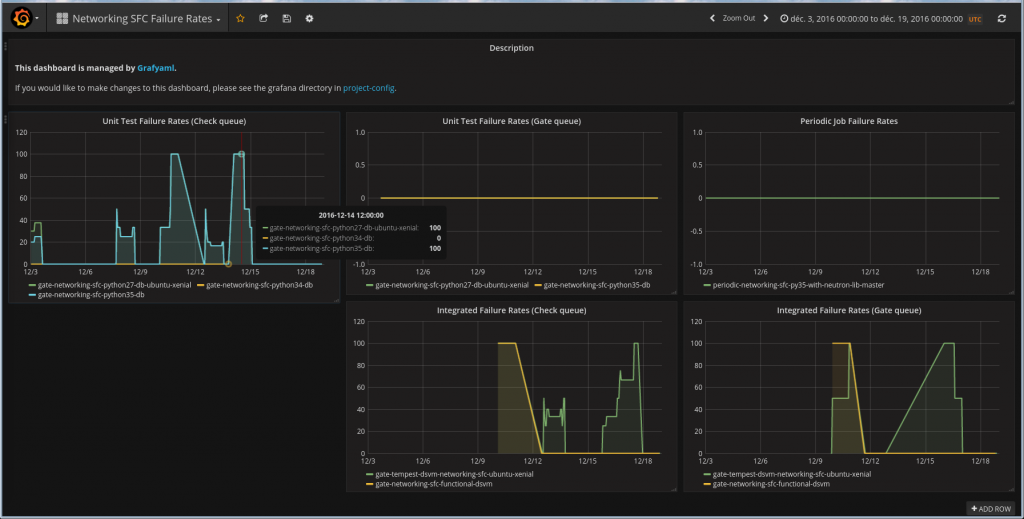

After a short detour on how Skydive can help debug Service Function Chaining (SFC), this post gives details on the new RPM packages now available in RDO to install networking-sfc. We will go through a complete TripleO demo deployment and configure the different nodes to enable the networking-sfc components.

Note that most steps are quite generic, so they can be used to install networking-sfc on other OpenStack setups!

Running tripleo-quickstart

To quickly get a TripleO setup up and running, I used tripleo-quickstart with mostly default options. Prepare a big enough machine (I used one with 32GB of RAM) that you can SSH into as root without a password (key-based login, as tripleo-quickstart expects), get this helpful script, and run it on the “master” release:

$ ./quickstart.sh --install-deps

$ ./quickstart.sh -R master -t all ${VIRTHOST}

After some coffee cups, you should get an undercloud node, an overcloud with one compute node and one controller node, all running inside your test system.

Accessing the nodes

A quick tip here: if you want easy access to all nodes and web interfaces, I recommend sshuttle, the “poor man’s VPN”. Once installed (it is available in Fedora and Gentoo at least), run this command as root:

# sshuttle -e "ssh -F /home/YOUR_USER/.quickstart/ssh.config.ansible" -r undercloud -v 10.0.0.0/24 192.168.24.0/24

You can now directly access both the undercloud IP addresses (192.168.x.x) and the overcloud ones (10.x)! For example, you can take a look at the tripleo-ui web interface, which should be at http://192.168.24.1:3000/ (username: admin; find the password with “sudo hiera admin_password” on the undercloud).

As for SSH access, tripleo-quickstart created a configuration file to simplify the commands, so you can use these:

# undercloud login (where we can run CLI commands)

$ ssh -F ~/.quickstart/ssh.config.ansible undercloud

# overcloud compute node

$ ssh -F ~/.quickstart/ssh.config.ansible overcloud-novacompute-0

# overcloud controller node

$ ssh -F ~/.quickstart/ssh.config.ansible overcloud-controller-0

The undercloud has some interesting scripts and credential files (stackrc for the undercloud itself, overcloudrc for… the overcloud indeed). But first, let’s get back to SFC.

Enable networking-sfc

First, install the networking-sfc RPM package on each node (we will run CLI and demo scripts from the undercloud, so do it on all three nodes):

# yum install -y python-networking-sfc
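If you would rather not log in to each node in turn, a small loop over the SSH aliases created by tripleo-quickstart can do the same job. This is only a sketch: `run_on_all_nodes` and the `SSH` override are hypothetical names introduced here, and it assumes the login user on each node can use sudo without a password.

```shell
#!/bin/sh
# Sketch only: run one command on every node of the demo deployment.
# Host aliases and the config path are the ones used earlier in this post.
NODES="undercloud overcloud-controller-0 overcloud-novacompute-0"
SSH_CONFIG="${SSH_CONFIG:-$HOME/.quickstart/ssh.config.ansible}"
# SSH is a hypothetical override so the loop can be dry-run (SSH=echo).
SSH="${SSH:-ssh}"

run_on_all_nodes() {
    for node in $NODES; do
        echo "== $node ==" >&2
        # "$*" joins the arguments into a single remote command line
        $SSH -F "$SSH_CONFIG" "$node" "$*" || return 1
    done
}

# Usage, from the machine where quickstart.sh was run:
#   run_on_all_nodes sudo yum install -y python-networking-sfc
```

The loop stops at the first node where the command fails, so a broken repository on one node does not go unnoticed.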

That was easy, right? OK, you still have to do the configuration steps manually (for now).

On the controller node(s), modify the neutron-server configuration file /etc/neutron/neutron.conf:

# Look for service_plugins line, add the SFC ones at the end

# The service plugins Neutron will use (list value)

service_plugins=router,qos,trunk,networking_sfc.services.flowclassifier.plugin.FlowClassifierPlugin,networking_sfc.services.sfc.plugin.SfcPlugin

[...]

# At the end, set the backends to use (in this case, the default OVS one)

[sfc]

drivers = ovs

[flowclassifier]

drivers = ovs

With the configuration in place, create the SFC tables in the neutron database and restart the neutron-server service:
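If you prefer to script the service_plugins change rather than edit the file by hand, something along these lines could work. It is a minimal sketch: `add_list_item` is a hypothetical helper (not a Neutron or TripleO tool), it rewrites every uncommented line with the given key regardless of section, and it normalizes the spacing to `key = value`.

```shell
#!/bin/sh
# Sketch: append ITEM to a comma-separated option (KEY = a,b,c) unless it is
# already there. add_list_item is a hypothetical helper, not a Neutron tool.
# It prints the updated file on stdout and leaves FILE untouched.
add_list_item() {
    awk -v key="$2" -v item="$3" '
        $0 ~ ("^[ \t]*" key "[ \t]*=") {
            value = substr($0, index($0, "=") + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", value)      # trim the current value
            n = split(value, parts, ",")
            found = 0
            for (i = 1; i <= n; i++) {
                gsub(/^[ \t]+|[ \t]+$/, "", parts[i])
                if (parts[i] == item) found = 1
            }
            if (!found) value = (value == "" ? item : value "," item)
            print key " = " value
            next
        }
        { print }                                     # copy other lines as-is
    ' "$1"
}

# Usage (review the diff before copying the new file back):
#   conf=/etc/neutron/neutron.conf
#   add_list_item "$conf" service_plugins \
#       networking_sfc.services.sfc.plugin.SfcPlugin > /tmp/neutron.conf.new
#   diff -u "$conf" /tmp/neutron.conf.new
```

Running it twice with the same item leaves the line unchanged, so it is safe to call once per plugin.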

$ sudo neutron-db-manage --subproject networking-sfc upgrade head

$ sudo systemctl restart neutron-server

Now for the compute node(s)! We will enable the SFC extension in the Open vSwitch agent. Note that its configuration file can differ depending on your setup (you can confirm yours by checking the output of “ps aux | grep agent”). In this demo, edit /etc/neutron/plugins/ml2/openvswitch_agent.ini:

# This time, look for the extensions line, add sfc to it

# Extensions list to use (list value)

extensions = qos,sfc
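Before restarting the agent, it can be worth checking that the edit really landed in the file. The following is a sketch under the same caveats as any ad hoc script: `has_list_item` is a hypothetical helper name, and it simply looks for the item in any uncommented line that sets the given key.

```shell
#!/bin/sh
# Sketch: succeed only if ITEM appears in the comma-separated option KEY.
# has_list_item is a hypothetical helper name, not part of Neutron.
has_list_item() {
    awk -v key="$2" -v item="$3" '
        $0 ~ ("^[ \t]*" key "[ \t]*=") {
            value = substr($0, index($0, "=") + 1)
            n = split(value, parts, ",")
            for (i = 1; i <= n; i++) {
                gsub(/[ \t]/, "", parts[i])           # ignore padding around items
                if (parts[i] == item) found = 1
            }
        }
        END { exit(found ? 0 : 1) }
    ' "$1"
}

# Usage on the compute node:
#   has_list_item /etc/neutron/plugins/ml2/openvswitch_agent.ini extensions sfc \
#       && echo "sfc extension is enabled in the agent configuration"
```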

And restart the agent:

$ sudo systemctl restart neutron-openvswitch-agent

Demo time

Congratulations, you successfully deployed a complete OpenStack setup and enabled SFC on it! To confirm it, connect to the undercloud and run some networking-sfc commands against the overcloud; they should run without errors:

$ source overcloudrc

(OVERCLOUD) $ neutron port-pair-list # Or "port-pair-create --help"
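To check more than one command at once, a short loop over the "list" commands added by networking-sfc gives a quick smoke test. This is a sketch, assuming overcloudrc has already been sourced; `sfc_smoke_test` and the `NEUTRON` override are hypothetical names used for illustration.

```shell
#!/bin/sh
# Sketch: call each networking-sfc "list" command once and stop at the first failure.
# NEUTRON is a hypothetical override, handy for a dry run (NEUTRON=echo).
NEUTRON="${NEUTRON:-neutron}"

sfc_smoke_test() {
    for cmd in flow-classifier-list port-pair-list port-pair-group-list port-chain-list; do
        if ! $NEUTRON "$cmd" >/dev/null; then
            echo "FAILED: $cmd" >&2
            return 1
        fi
        echo "ok: $cmd"
    done
}

# Usage on the undercloud:
#   source overcloudrc
#   sfc_smoke_test
```

On a fresh deployment the lists are empty, so only the exit status of each command matters here.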

I updated my demo script for this tripleo-quickstart setup: in addition to the SFC-specific parts, it will also create basic networks, images, … and floating IP addresses for the demo VMs (we cannot connect directly to the private addresses, as we are not on the same node this time). Now, still on the undercloud, download and run the script:

$ git clone https://github.com/voyageur/openstack-scripts.git

$ ./openstack-scripts/simple_sfc_vms.sh

If all went well, you can read back a previous post to see how to test this setup, or go on your own and experiment.

As a short example to end this post, this will confirm that an HTTP request from the source VM does indeed visit a few systems on the way:

$ source overcloudrc

# Get the private IP for the destination VM

(OVERCLOUD) $ openstack server show -f value -c addresses dest_vm

private=172.24.4.9, 192.168.24.104

# Get the floating IP for the source VM

(OVERCLOUD) $ openstack server show -f value -c addresses source_vm

private=172.24.4.19, 192.168.24.107

(OVERCLOUD) $ ssh cirros@192.168.24.107

$ traceroute -n 172.24.4.9

traceroute to 172.24.4.9 (172.24.4.9), 30 hops max, 46 byte packets

1 172.24.4.13 23.700 ms 0.476 ms 0.320 ms

2 172.24.4.15 4.239 ms 0.467 ms 0.374 ms

3 172.24.4.9 0.941 ms 0.599 ms 0.429 ms
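If you want to compare the path against the addresses you expect, the hop list can be pulled out of the traceroute output with a one-line filter. A small sketch: `hop_addresses` is a hypothetical helper name, and it assumes the numeric `-n` output format shown above.

```shell
#!/bin/sh
# Sketch: print the responding address of each hop from "traceroute -n" output.
# hop_addresses is a hypothetical helper; it reads the output on stdin.
hop_addresses() {
    # Hop lines start with the hop number; the header line starts with "traceroute"
    awk '$1 ~ /^[0-9]+$/ { print $2 }'
}

# Usage, inside the source VM:
#   traceroute -n 172.24.4.9 | hop_addresses
```

With the output above, this prints the two intermediate addresses followed by the destination. A hop that does not answer shows up as `*`.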

Yes, these networking-sfc RPM packages do seem to work 🙂